SEOUL — Sixteen companies at the forefront of developing artificial intelligence pledged on Tuesday at a global meeting to develop the technology safely, at a time when regulators are scrambling to keep up with rapid innovation and emerging risks.

The companies included US leaders Google, Meta, Microsoft and OpenAI, as well as firms from China, South Korea and the United Arab Emirates.

They committed to publishing safety frameworks for measuring risks, to avoid models where risks could not be sufficiently mitigated, and to ensure governance and transparency.

"It's vital to get international agreement on the 'red lines' where AI development would become unacceptably dangerous to public safety," said Beth Barnes, founder of METR, a group promoting AI model safety, in response to the declaration.


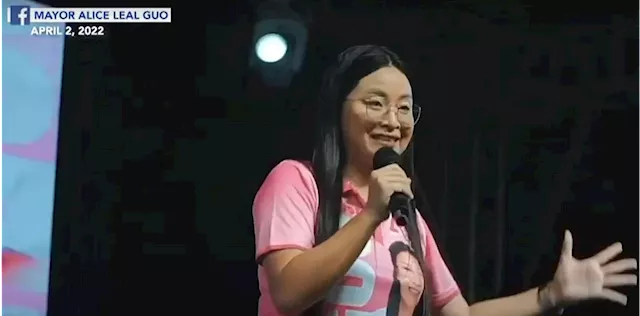
